pckung@umich.edu


I am a PhD student in Robotics at the University of Michigan–Ann Arbor (UM), working at the Ford Center for Autonomous Vehicles (FCAV) advised by Prof. Katie Skinner, focusing on NeRF and Gaussian Splatting–based scene reconstruction and SLAM using multi-sensor modalities. During my PhD, I interned at Meta Reality Labs and Latitude AI (Ford). My work at Meta focused on indoor radar-based SLAM, while at Latitude AI I developed camera–LiDAR data synthesis pipelines for autonomous driving. Previously, I worked on the Self-Driving Car Team at the Industrial Technology Research Institute (ITRI) in Taiwan, under the guidance of Prof. Chieh-Chih (Bob) Wang. I received my M.Sc. in Robotics from National Yang Ming Chiao Tung University (NYCU), where I was advised by Prof. Chieh-Chih (Bob) Wang and Prof. Wen-Chieh (Steve) Lin. Before starting my master’s studies, I had the pleasure of working with Prof. Nikolay Atanasov at UC San Diego. Prior to that, I earned my B.Sc. in Electrical Engineering from National Sun Yat-sen University (NSYSU), where I was an undergraduate research assistant advised by Prof. Kao-Shing Hwang. My research interests lie at the intersection of robotics, computer vision, and machine learning, with a focus on robot perception and state estimation. In particular, I work on robot scene understanding, 3D reconstruction, and SLAM using multi-sensor modalities, including cameras, LiDAR, radar, and sonar.

Meta Reality Labs | Latitude AI | U-M | NYCU (NCTU) | UCSD | NSYSU


abstract

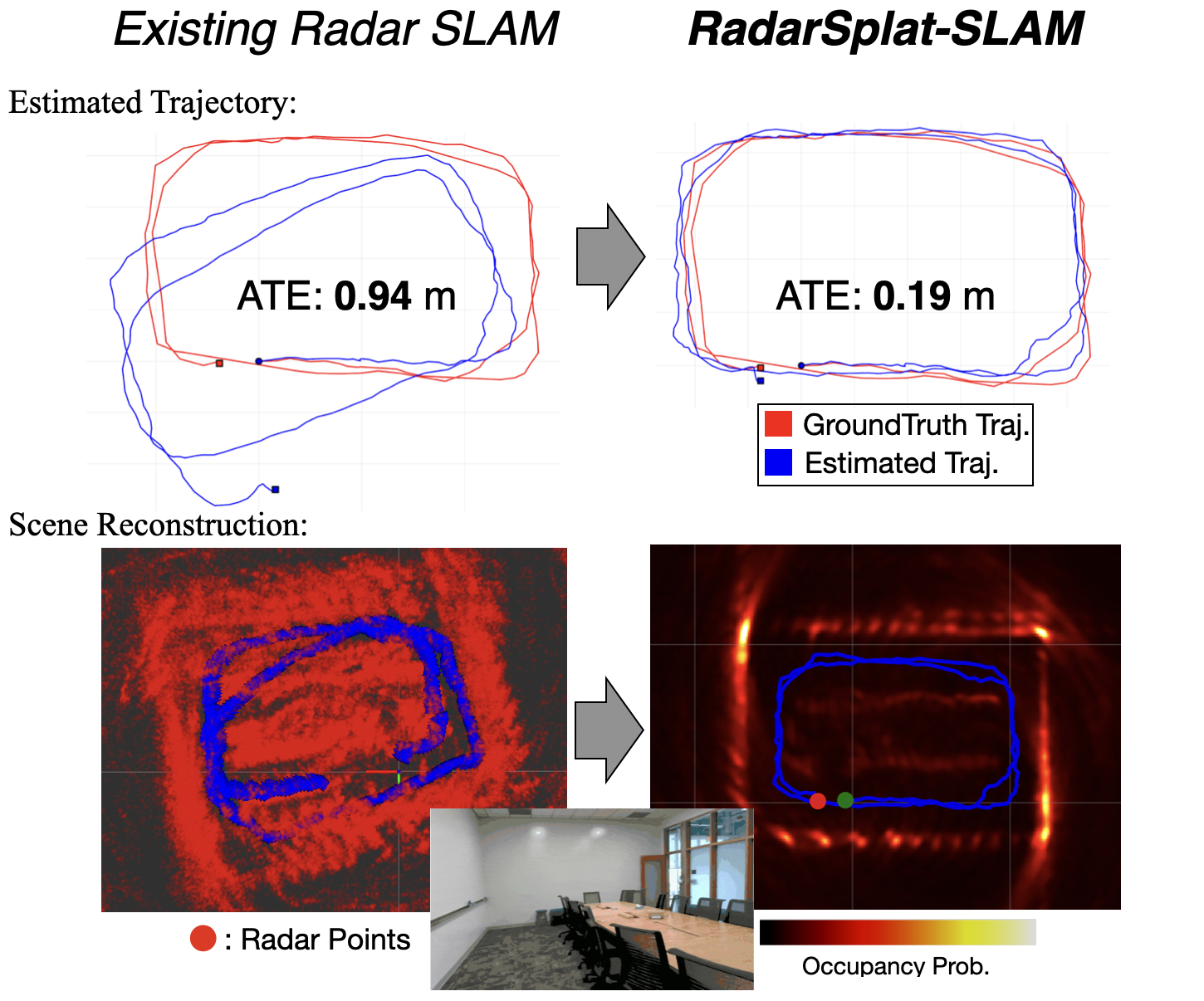

Radar is more resilient to adverse weather and lighting conditions than visual and LiDAR SLAM systems, and it also offers superior privacy protection since it does not capture detailed visual information. However, most radar SLAM pipelines still rely heavily on dead-reckoning odometry, which leads to substantial drift. While loop closure can correct long-term errors, it requires revisiting places and relies on robust place recognition. In contrast, visual SLAM leverages bundle adjustment (BA) to continuously refine poses and scene structure within a local window. However, an equivalent BA formulation for radar has remained largely unexplored. We present the first radar bundle adjustment framework, enabled by Gaussian Splatting (GS), a dense and differentiable scene representation. Our method jointly optimizes radar sensor poses and scene geometry, effectively bringing the benefits of visual SLAM BA to radar for the first time. Integrated with a classical frame-to-frame radar-inertial frontend, our approach significantly reduces drift and improves robustness. Across multiple indoor environments, our radar BA achieves substantial gains over existing radar-inertial odometry, reducing average absolute translational and rotational errors by ~90% and ~80%, respectively.

abstract | bibtex | arXiv | project page

High-fidelity 3D scene reconstruction plays a crucial role in autonomous driving by enabling novel data generation from existing datasets. This allows simulating safety-critical scenarios and augmenting training datasets without incurring further data collection costs. While recent advances in radiance fields have demonstrated promising results in 3D reconstruction and sensor data synthesis using cameras and LiDAR, their potential for radar remains largely unexplored. Radar is crucial for autonomous driving due to its robustness in adverse weather conditions like rain, fog, and snow, where optical sensors often struggle. Although the state-of-the-art radar-based neural representation shows promise for 3D driving scene reconstruction, it performs poorly in scenarios with significant radar noise, including receiver saturation and multipath reflection. Moreover, it is limited to synthesizing preprocessed, noise-excluded radar images, failing to address realistic radar data synthesis. To address these limitations, this paper proposes RadarSplat, which integrates Gaussian Splatting with novel radar noise modeling to enable realistic radar data synthesis and enhanced 3D reconstruction. Compared to the state-of-the-art, RadarSplat achieves superior radar image synthesis (+3.4 PSNR / 2.6x SSIM) and improved geometric reconstruction (-40% RMSE / 1.5x Accuracy), demonstrating its effectiveness in generating high-fidelity radar data and scene reconstruction.

abstract | bibtex | arXiv | project page

Photorealistic 3D scene reconstruction plays an important role in autonomous driving, enabling the generation of novel data from existing datasets to simulate safety-critical scenarios and expand training data without additional acquisition costs. Gaussian Splatting (GS) facilitates real-time, photorealistic rendering with an explicit 3D Gaussian representation of the scene, providing faster processing and more intuitive scene editing than the implicit Neural Radiance Fields (NeRFs). While extensive GS research has yielded promising advancements in autonomous driving applications, it overlooks two critical aspects. First, existing methods mainly focus on low-speed and feature-rich urban scenes and ignore the fact that highway scenarios play a significant role in autonomous driving. Second, while LiDARs are commonplace in autonomous driving platforms, existing methods learn primarily from images and use LiDAR only for initial estimates or without precise sensor modeling, thus missing out on the rich depth information LiDAR offers and limiting the ability to synthesize LiDAR data. In this paper, we propose a novel GS method for dynamic scene synthesis and editing with improved scene reconstruction through LiDAR supervision and support for LiDAR rendering. Unlike prior works that are tested mostly on urban datasets, to the best of our knowledge, we are the first to focus on the more challenging and highly relevant highway scenes for autonomous driving, with sparse sensor views and monotone backgrounds.

abstract | bibtex | paper | project page

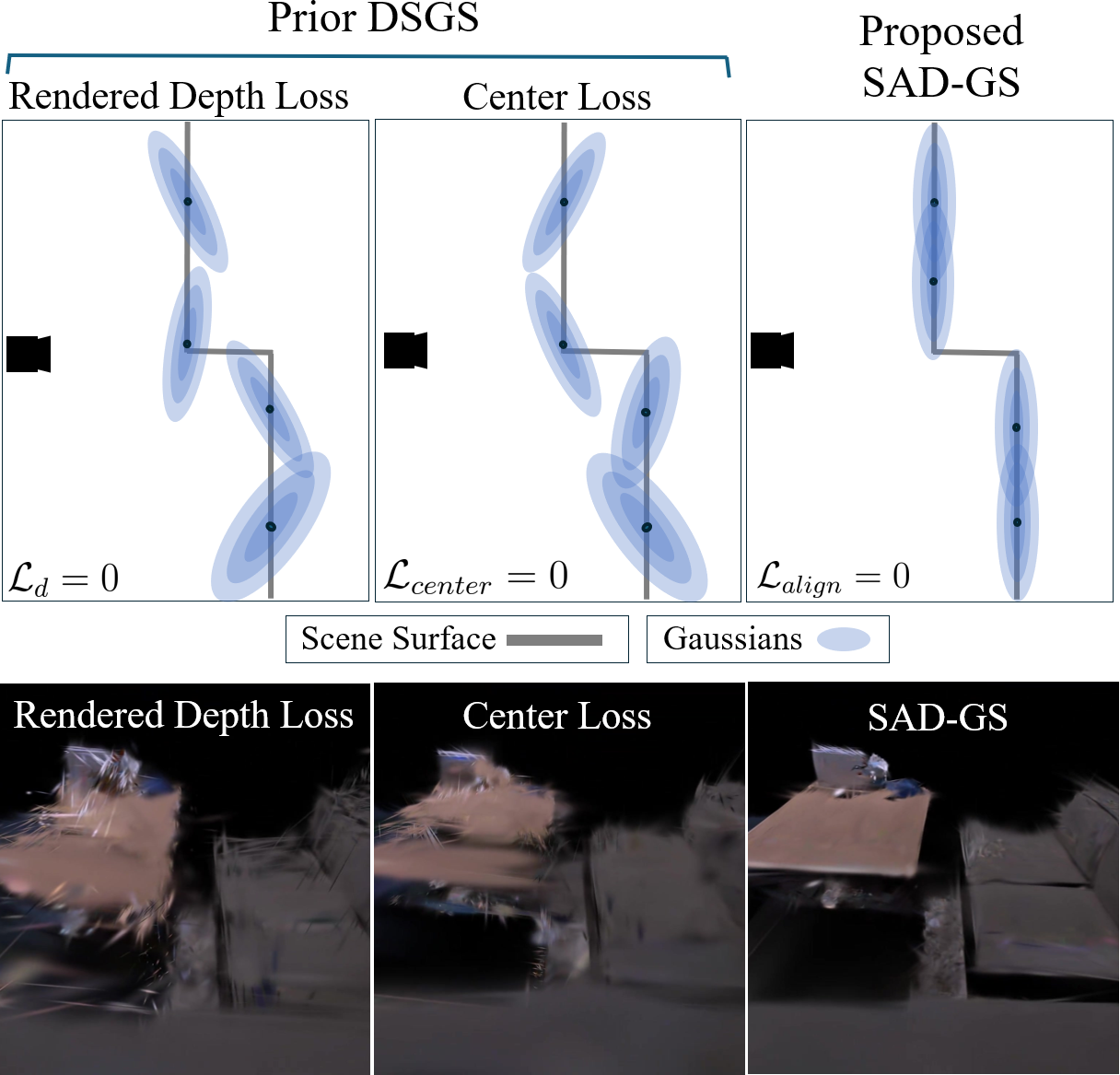

This paper proposes SAD-GS, a depth-supervised Gaussian Splatting (GS) method that provides accurate 3D geometry reconstruction by introducing a shape-aligned depth supervision strategy. Depth information is widely used in various GS applications, such as dynamic scene reconstruction, real-time simultaneous localization and mapping, and few-shot reconstruction. However, existing depth-supervised methods for GS all focus on the center and neglect the shape of Gaussians during training. This oversight can result in inaccurate surface geometry in the reconstruction and can harm downstream tasks like novel view synthesis, mesh reconstruction, and robot path planning. To address this, this paper proposes a shape-aligned loss, which aims to produce a smooth and precise reconstruction by adding extra constraints to the Gaussian shape. The proposed method is evaluated qualitatively and quantitatively on two publicly available datasets. The evaluation demonstrates that the proposed method provides state-of-the-art novel view rendering quality and mesh accuracy compared to existing depth-supervised GS methods. |

abstract | bibtex | arXiv | project page

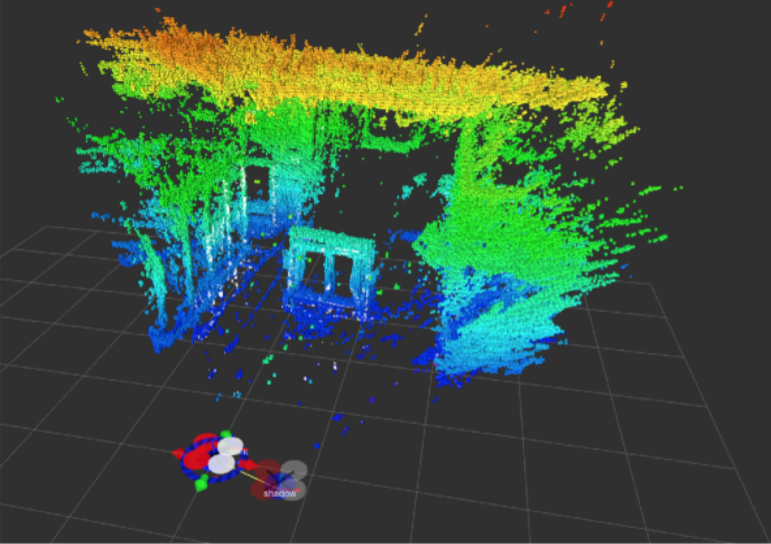

This paper proposes LONER, the first real-time LiDAR SLAM algorithm that uses a neural implicit scene representation. Existing implicit mapping methods for LiDAR show promising results in large-scale reconstruction, but either require ground-truth poses or run slower than real-time. In contrast, LONER uses LiDAR data to train an MLP to estimate a dense map in real-time, while simultaneously estimating the trajectory of the sensor. To achieve real-time performance, this paper proposes a novel information-theoretic loss function that accounts for the fact that different regions of the map may be learned to varying degrees throughout online training. The proposed method is evaluated qualitatively and quantitatively on two open-source datasets. This evaluation illustrates that the proposed loss function converges faster and leads to more accurate geometry reconstruction than other loss functions used in depth-supervised neural implicit frameworks. Finally, this paper shows that LONER estimates trajectories competitively with state-of-the-art LiDAR SLAM methods, while also producing dense maps competitive with existing real-time implicit mapping methods that use ground-truth poses.

abstract | bibtex | arXiv | video

Radar shows great potential for autonomous driving by accomplishing long-range sensing under diverse weather conditions. But radar is also a particularly challenging sensing modality due to radar noise. Recent works have made enormous progress in classifying free and occupied spaces in radar images by leveraging LiDAR label supervision. However, several issues remain unsolved. First, the sensing distance of the results is limited by the sensing range of LiDAR. Second, the performance of the results is degraded by LiDAR supervision due to the physical sensing discrepancies between the two sensors. For example, some objects visible to LiDAR are invisible to radar, and some objects occluded in LiDAR scans are visible in radar images because of the radar's penetrating capability. These sensing differences cause false positives and degraded penetrating capability, respectively.

abstract | bibtex | arXiv | video | presentation

Existing radar sensors can be classified into automotive and scanning radars. While most radar odometry (RO) methods are designed for only one specific type of radar, our RO method adapts to both scanning and automotive radars. Our RO is simple yet effective: the pipeline consists of thresholding, probabilistic submap building, and NDT-based radar scan matching. The proposed RO has been tested on two public radar datasets: the Oxford Radar RobotCar dataset and the nuScenes dataset, which provide scanning and automotive radar data, respectively. The results show that our approach surpasses state-of-the-art RO using either automotive or scanning radar by reducing translational error by 51% and 30%, respectively, and rotational error by 17% and 29%, respectively. In addition, we show that our RO achieves centimeter-level accuracy comparable to LiDAR odometry, and that automotive and scanning RO have similar accuracy.

abstract | video

Radar presents a promising alternative to LiDAR in autonomous driving applications, offering several advantages such as the ability to work under diverse weather conditions, direct Doppler velocity estimation, and relatively low price.

However, 4D radar outputs a 3D heatmap instead of a precise 3D shape of the surrounding environment. Moreover, the current state-of-the-art radar feature detection method, constant false alarm rate (CFAR), only outputs sparse radar features.


abstract

Set up sensors on the vehicle and collected data in crowded urban areas.

abstract | webpage | video | slide | code

In this project, we used a Hokuyo laser scanner with scan matching (ICP) and factor graph optimization (GTSAM) to achieve LiDAR odometry. We ran our algorithm onboard an NVIDIA TX2 running Ubuntu 18.04. Rather than relying on consecutive LiDAR scan matching alone, we added the estimated transformations between the current and previous scans as constraints in the factor graph to optimize the robot pose.
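As a rough illustration of the scan-matching side of this pipeline, the sketch below chains relative 2D transforms, the kind of output ICP produces between consecutive scans, into a trajectory. It is not the project's code: the names `compose` and `chain_odometry` and the sample motions are hypothetical, and the GTSAM optimization step is omitted. In a factor graph formulation, each relative transform would instead enter as a constraint between two poses, so the optimizer can balance it against the others.

```python
import math


def compose(pose, delta):
    """Apply a relative SE(2) motion (dx, dy, dtheta), expressed in the
    frame of `pose`, to the world-frame pose (x, y, theta)."""
    x, y, th = pose
    dx, dy, dth = delta
    nx = x + math.cos(th) * dx - math.sin(th) * dy
    ny = y + math.sin(th) * dx + math.cos(th) * dy
    # Wrap the heading so it stays within [-pi, pi].
    nth = math.atan2(math.sin(th + dth), math.cos(th + dth))
    return (nx, ny, nth)


def chain_odometry(deltas, start=(0.0, 0.0, 0.0)):
    """Accumulate scan-to-scan transforms into a list of world-frame poses."""
    poses = [start]
    for delta in deltas:
        poses.append(compose(poses[-1], delta))
    return poses


if __name__ == "__main__":
    # Hypothetical scan-matching results: drive 1 m, then turn left 90 degrees,
    # repeated four times to trace a square.
    deltas = [(1.0, 0.0, math.pi / 2)] * 4
    for pose in chain_odometry(deltas):
        print(tuple(round(v, 3) for v in pose))
```

Because every pose here depends on all earlier transforms, any scan-matching error accumulates along the chain, which is the drift that adding constraints to a factor graph is meant to reduce.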

abstract | product webpage | video | code

Extended the Duckiebot with a 2D LiDAR to achieve 2D SLAM and navigation. Implemented LiDAR particle filter SLAM, EKF SLAM, AMCL localization, and A* path planning on the robot. More than 200 KnightCars were sold as teaching material to 5 colleges within a year.


abstract

Implemented face recognition (Haar Cascades), classification (InceptionV3), tracking (KLT tracker), and a PID controller on a quadrotor to achieve people tracking.
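For readers unfamiliar with the control part, here is a minimal sketch of a discrete PID controller of the kind mentioned above. The `PID` class, the gains, and the pixel-offset example are illustrative assumptions, not the project's actual implementation or tuning.

```python
class PID:
    """Discrete PID controller: u = kp*e + ki*integral(e) + kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, error, dt):
        """Return the control command for the current error and time step."""
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    # Hypothetical use: the error is the horizontal offset (in pixels) between
    # the tracked face and the image center; the output is a yaw-rate command.
    yaw_pid = PID(kp=0.004, ki=0.0005, kd=0.001)
    for offset_px in (120.0, 80.0, 30.0, 5.0):
        print(yaw_pid.update(offset_px, dt=0.05))
```

One controller instance per controlled axis keeps the integral and derivative state separate.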

Spring 2021, TA, Human Centric Computing, NYCU

Fall 2020, TA, Self-Driving Cars, NYCU

Spring 2020, TA, Human Centric Computing, NYCU

Nov 2024, Recipient of Rackham International Students Fellowship/Chia-Lun Lo Fellowship

April 2024, RA-L'23 Best Paper Award - Selected from over 1,200 accepted papers

Spring 2021, Recipient of The Phi Tau Phi Scholastic Honor - Awarded to only 130 students each year (<0.4%)

Fall 2020, NYCU Academic Achievement Award - Ranked 1st in a semester

Spring 2020, NYCU Academic Achievement Award - Ranked 1st in a semester

Fall 2019, NYCU Academic Achievement Award - Ranked 1st in a semester

Fall 2018, NSYSU College of Engineering Project Champion - First place out of 26 teams

Fall 2018, NSYSU Excellent Student Award - Top 3 students in a semester

Spring 2017, NSYSU Excellent Student Award - Top 3 students in a semester

A huge thanks for the template from this.